Kubernetes - k3s on AlmaLinux

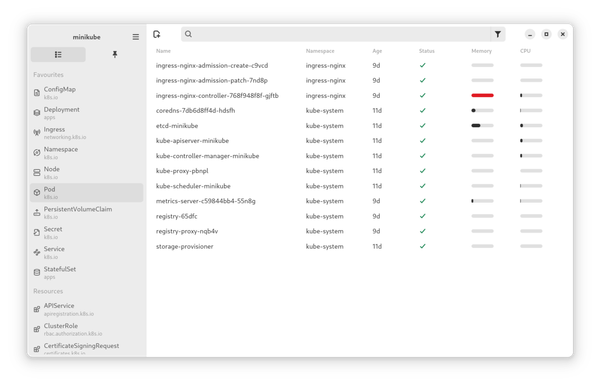

In some past articles, I talked about Kubernetes and how to get started. Minikube is awesome for local development, but what about Kubernetes in small environments? k3s is one of the easiest ways to deploy and run a Kubernetes instance, and it can also be used to create highly available clusters.

Let's have a look at how you can deploy k3s on AlmaLinux.

k3s

k3s is a Kubernetes distribution that is intended to be minimal and easy to use. For me, both statements are true. One might say: "It's just a ~60MB binary." In fact, k3s was my first working Kubernetes installation that brought everything I needed.

This does not mean that it cannot do a lot. k3s can be used for a single instance on a Raspberry Pi, but you can also scale it up to a highly available, multi-node setup. You will get the Kubernetes metrics server, Traefik as an Ingress controller and overlay networking out of the box, too.

This makes it a perfect fit for IoT use cases, small environments and home labs.

AlmaLinux

AlmaLinux is the spiritual successor of CentOS. With the introduction of CentOS Stream and its placement upstream of Red Hat Enterprise Linux (RHEL), CentOS has more moving parts than before. This does not make it unstable or unreliable, but you will see far more updates on a regular basis. Furthermore, some 3rd party products are not used to this fast-paced approach, and you might run into issues with them.

For a small production environment, I prefer AlmaLinux, which is a bug-for-bug, feature-for-feature compatible derivative of RHEL. You will get minor updates for 5+ years, very well tested software and a truly awesome community that helps with development, support, and contribution.

I have also addressed AlmaLinux in a dedicated Spotlight article, if you want to know more about it.

Deployment

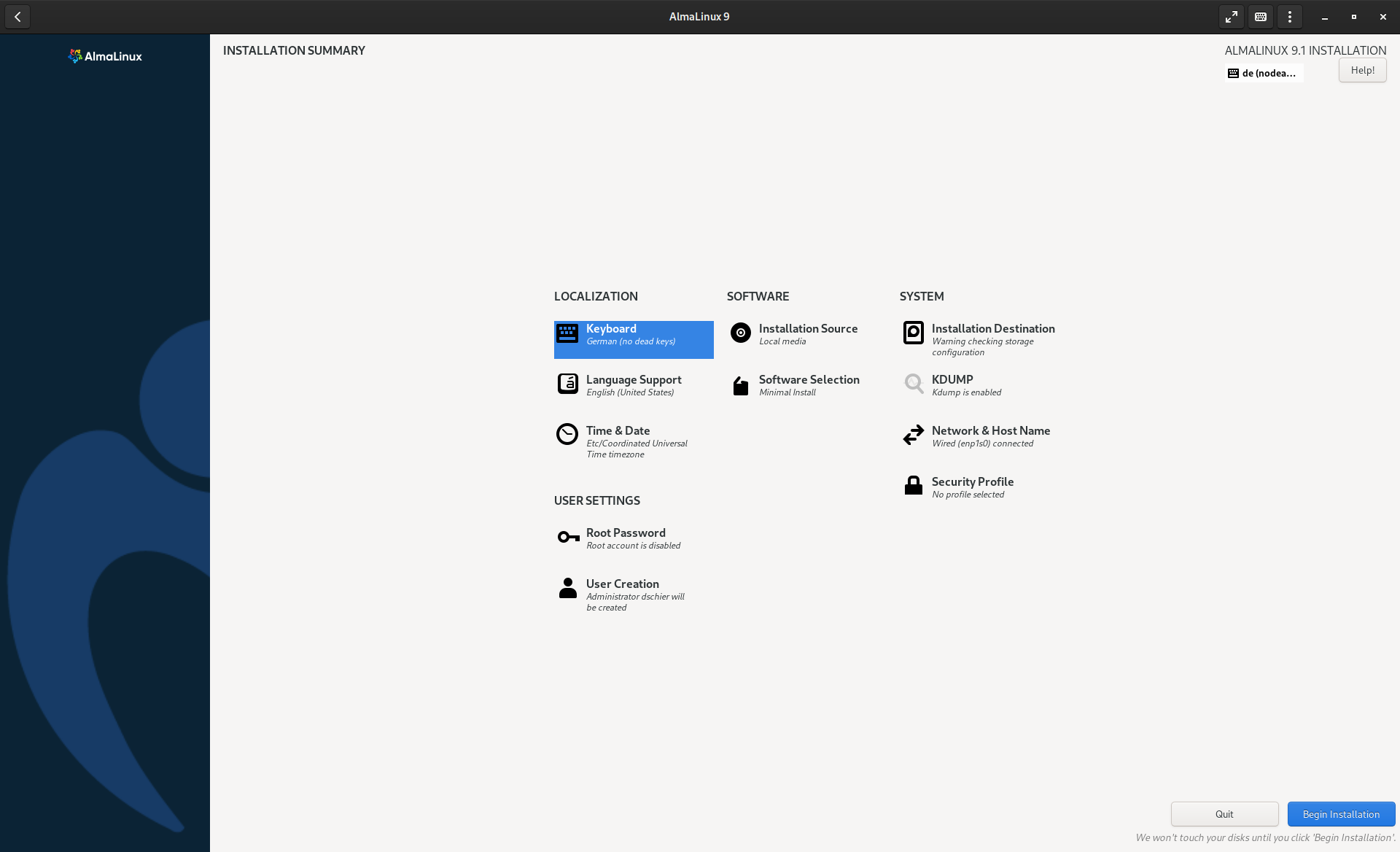

The deployment of k3s on AlmaLinux is easy once you have done it, but there are some things you need to be aware of. Let's have a look at the steps. In case you need a more detailed installation guide, please check out the "Spotlight - AlmaLinux" article.

Prerequisites

To deploy k3s on AlmaLinux, you will need a running AlmaLinux machine. You might start with a general purpose server instance, but there are some improvements that should be considered.

For the sake of this tutorial, I am spinning up an AlmaLinux 9.1 machine in GNOME Boxes. But, you can also check out the Home Server deployment or use some real hardware.

Resources

k3s has a very small footprint. However, the things we want to deploy afterward will require resources, too. You might calculate this on your own, but for a small home environment or development machine I recommend:

- CPU: 2 cores

- RAM: 2 GB

- Disk: 30 GB
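If you want to compare a machine against these recommendations, a rough pre-flight check could look like the sketch below. The function names and the exact thresholds are my own illustration and not part of k3s. Note that the kernel reports slightly less memory than the nominal size, and the root filesystem is smaller than the whole disk, so treat the numbers as guidance only.

```shell
#!/bin/sh
# Rough pre-flight check against the recommendations above.
# Function names and thresholds are illustrative assumptions.

# report NAME ACTUAL REQUIRED: print one line, return non-zero if ACTUAL < REQUIRED.
report() {
    if [ "$2" -ge "$3" ]; then
        echo "OK   $1: $2 (recommended: $3)"
    else
        echo "LOW  $1: $2 (recommended: $3)"
        return 1
    fi
}

# preflight: read the values from the running Linux system and report them.
preflight() {
    cores=$(nproc)
    # MemTotal is given in KiB and is usually a bit below the nominal RAM size,
    # hence the threshold slightly under 2048 MiB.
    mem_mib=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
    # Size of the filesystem that holds / in GiB (smaller than the raw disk).
    disk_gib=$(df -Pk / | awk 'NR == 2 { print int($2 / 1024 / 1024) }')

    status=0
    report "CPU cores" "$cores" 2 || status=1
    report "RAM (MiB)" "$mem_mib" 1700 || status=1
    report "Root filesystem (GiB)" "$disk_gib" 20 || status=1
    return "$status"
}

# Run the check with: preflight
```

Calling preflight on the target machine prints one line per resource and returns a non-zero status if anything is below the threshold.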

Hostname

k3s has some issues when running on a host called "localhost". Therefore, you should change the hostname, either in the installer via the Network configuration or after the setup.

# Change hostname

$ sudo hostnamectl set-hostname k3s01

Partitions

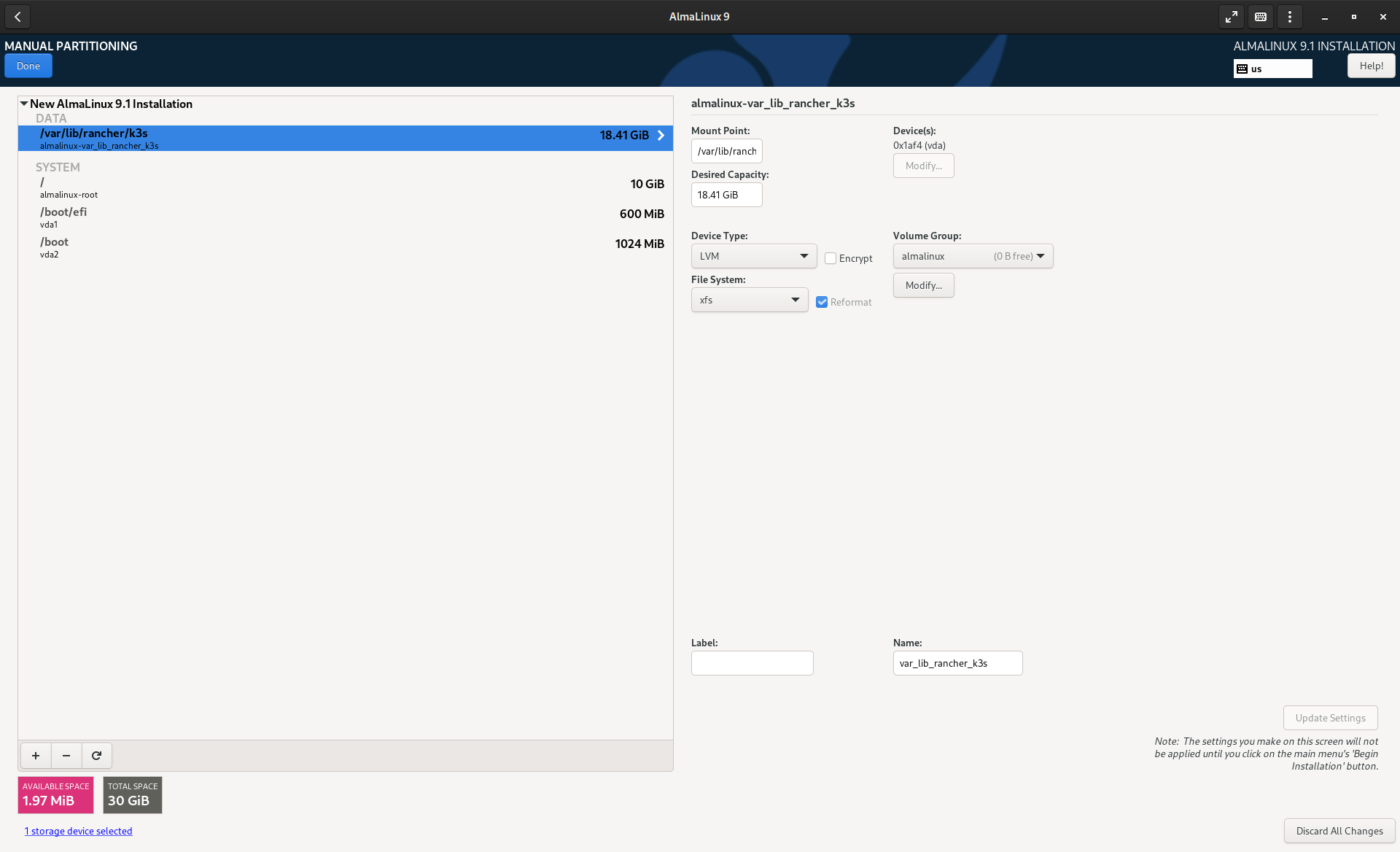

During the installation process, you can customize your partition scheme.

Kubernetes does not work very well with swap. There have been some discussions about it, and initial (alpha) swap support was introduced with Kubernetes v1.22. Still, it is a good idea to disable swap.
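If the machine was installed with a swap partition anyway, swap can be turned off afterward. The helper below is a minimal sketch of the fstab part: it prints the file with active swap entries commented out, so you can review the result before touching the real /etc/fstab. The function name is hypothetical, and the pattern assumes the usual fstab layout with "swap" as the third field.

```shell
#!/bin/sh
# comment_out_swap FILE: print FILE with every active swap entry commented out.
# The result goes to stdout; FILE itself is not modified.
comment_out_swap() {
    sed -E 's/^([[:space:]]*[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]])/#\1/' "$1"
}

# Typical use on the k3s host (review fstab.new before replacing /etc/fstab):
#   sudo swapoff -a
#   comment_out_swap /etc/fstab > fstab.new
```

The swapoff call disables swap for the running system, while the edited fstab keeps it disabled after the next reboot.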

k3s supports local storage, meaning that you can create persistent volumes, which will be stored in /var/lib/rancher/k3s. This is also the place where all variable data for k3s is stored. To avoid a full root disk and to allocate sufficient storage for your needs, creating a dedicated partition is recommended.

Firewall

AlmaLinux comes with firewalld pre-installed and enabled. In general, this is a good idea and works well. With k3s, however, firewalld can conflict with the internal networks of Kubernetes.

Therefore, it is recommended to disable and mask firewalld after the installation via:

# Disable and mask firewalld

$ sudo systemctl disable --now firewalld.service

$ sudo systemctl mask firewalld.service

# reboot

$ sudo systemctl reboot

I am currently working out a proper firewalld configuration to avoid this step. If you have something that works, I would appreciate some hints.
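One possible starting point is the set of rules that the k3s documentation lists for hosts that keep firewalld enabled: open the API server port and trust the default pod and service networks. I have not verified this on the setup described here, so take it as a sketch. The CIDRs are the k3s defaults, and the function name is made up for this example. It only prints the commands, so nothing changes until you decide to run them.

```shell
#!/bin/sh
# Default k3s networks; adjust if the cluster was installed with custom CIDRs.
POD_CIDR="10.42.0.0/16"
SERVICE_CIDR="10.43.0.0/16"

# print_firewalld_rules: emit the firewall-cmd calls instead of running them,
# so they can be reviewed first.
print_firewalld_rules() {
    echo "firewall-cmd --permanent --add-port=6443/tcp"
    echo "firewall-cmd --permanent --zone=trusted --add-source=${POD_CIDR}"
    echo "firewall-cmd --permanent --zone=trusted --add-source=${SERVICE_CIDR}"
    echo "firewall-cmd --reload"
}

print_firewalld_rules
# To apply after review: print_firewalld_rules | sudo sh
```

If you go this route, firewalld has to stay unmasked and running, and the ports used by the Ingress controller (80 and 443) would most likely need to be opened as well.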

Updates

Always do updates after a fresh setup to fix bugs, mitigate security issues and maximize compatibility with 3rd party tools.

# Update system

$ sudo dnf update

# Reboot afterwards

$ sudo systemctl reboot

Installation

After the preparation of an AlmaLinux machine, the provisioning of k3s is even easier. You just need to run a single command.

# Install k3s via script

$ curl -sfL https://get.k3s.io | sh -

But wait, isn't curl | bash considered harmful? Well, yes and no. The problem is not that the process itself is harmful. It is more about: "You don't know what's going on and what can go wrong." The "proper" way of doing something like this would be:

# Download the script

$ curl https://get.k3s.io > k3s_install.sh

# Read what's going on

$ vi k3s_install.sh

# Execute the script

$ sudo sh k3s_install.sh

If you do this, you will learn that the script will install some additional packages, download the k3s binary, create some symlinks, provide service files in /etc/systemd/system/, write some config to /etc/rancher/k3s/ and start the whole thing at the end.
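To see for yourself what ended up on the system, you could check for the paths mentioned above. This is a small sketch with a hypothetical helper name. The binary location /usr/local/bin/k3s and the unit name k3s.service are what a default server installation uses, so they are assumptions if you customized the install.

```shell
#!/bin/sh
# check_paths ROOT: report which of the expected paths exist below ROOT.
# ROOT is normally "/"; it is a parameter so the check can be tried on a copy.
check_paths() {
    root=${1%/}
    missing=0
    for p in usr/local/bin/k3s etc/systemd/system/k3s.service etc/rancher/k3s; do
        if [ -e "$root/$p" ]; then
            echo "found    /$p"
        else
            echo "missing  /$p"
            missing=1
        fi
    done
    return "$missing"
}

# Example: check_paths /
```

The function returns a non-zero status if at least one path is missing, which makes it easy to use in a script.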

In any case, after the execution, you will be able to check if your deployment is working.

# Check if the service is running

$ systemctl status k3s.service

# Use the k3s config check

$ k3s check-config

# Execute a kubectl command to interact with the machine

$ kubectl get nodes

You might run into two situations here:

- You are executing kubectl as root, which will result in a "command not found". This is due to the fact that the root user does not have /usr/local/bin in its $PATH variable. Easy enough, you can run /usr/local/bin/kubectl instead. The same applies to the k3s commands.

- When running the commands as a regular user, you might face the situation that /etc/rancher/k3s/k3s.yaml is not readable. This can be fixed by a simple sudo chmod 0644 /etc/rancher/k3s/k3s.yaml.
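For the first situation, a more permanent option is to extend the PATH of the root shell, for example from its shell profile. The helper below is a minimal sketch with a hypothetical name. It only appends the directory if it is not listed yet, so sourcing it repeatedly does no harm.

```shell
#!/bin/sh
# path_append DIR: add DIR to PATH unless it is already listed.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                 # already present, nothing to do
        *) PATH="$PATH:$1" ;;
    esac
}

path_append /usr/local/bin
export PATH
```

For the second situation, the k3s installer can also set the file mode during installation if you pass K3S_KUBECONFIG_MODE="644" as an environment variable to the install script. Keep in mind that a world-readable kubeconfig gives every local user admin access to the cluster.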

After this small setup, let's see what we can do with it.

First steps

Now we have a Kubernetes instance. One might end this guide here, but running Kubernetes for the sake of running it? Let's do two more things.

Workstation

You might want to reach the cluster from your workstation. Kubernetes is designed to be used via an API. We already did this above, when running the kubectl command. Kubectl is the client that connects to a given instance and performs API requests. This means you can run the same commands over the network.

To do so, you need to install the Kubectl binary on your own workstation. For Linux, this is trivial.

# Download kubectl

$ curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl

This will download the needed binary, which can then be executed.
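Before executing a downloaded binary, you may want to compare it against a published checksum. The sketch below assumes that a file named kubectl.sha256, containing the hex digest, was downloaded from the same release location as the binary. The helper name is made up for this example, and it relies on sha256sum from coreutils.

```shell
#!/bin/sh
# verify_sha256 FILE SUMFILE: succeed only if FILE matches the hex digest in SUMFILE.
verify_sha256() {
    expected=$(awk '{ print $1; exit }' "$2")
    actual=$(sha256sum "$1" | awk '{ print $1 }')
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example, assuming kubectl.sha256 sits next to the binary:
#   verify_sha256 kubectl kubectl.sha256 && echo "checksum OK"
```

If the check passes, you can continue and run the binary.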

# Make the binary executable and run it directly from the directory

$ chmod +x kubectl

$ ./kubectl version --client

If you want to make it available for your user or even all users, you can move the file to a proper directory.

# For local user only

$ mv kubectl ~/.local/bin/kubectl

# For all users

$ sudo mv kubectl /usr/local/bin/kubectl

Afterward, you can run the command like any other command.

# Execute kubectl

$ kubectl version --client

But you also want to connect to your freshly deployed Kubernetes/k3s. For this, you will need a config file (the kubeconfig) from the server. You need to grab this from your running k3s instance. Just copy the file from /etc/rancher/k3s/k3s.yaml and store it on your local machine.

Afterward, you need to edit the file and enter the IP address in it.
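If you prefer to script this edit, a small sed wrapper can do it. This is a minimal sketch with a hypothetical function name. It rewrites the host part of the server line and prints the result, so the original file stays untouched. The address 192.0.2.10 in the example is a placeholder.

```shell
#!/bin/sh
# set_kubeconfig_server FILE ADDRESS: print FILE with the API server line
# pointing at ADDRESS. Scheme and port are kept as they are.
set_kubeconfig_server() {
    sed -E "s#^([[:space:]]*server:[[:space:]]*https://)[^:/]+(:[0-9]+)#\1$2\2#" "$1"
}

# Example:
#   set_kubeconfig_server k3s.yaml 192.0.2.10 > k3s-remote.yaml
```

Either way, the relevant part of the file should look like this afterward: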

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: "..."
    server: https://INSTANCE_IP_ADDRESS:6443
...

Now, you can use this file to connect to your Kubernetes instance from your workstation.

# kubectl

$ kubectl --kubeconfig="path/to/k3s.yaml" get nodes

First deployment

Deployment done, connection done. You might want to deploy something, right? I already published the "Kubernetes - Getting Started" article for more details. For now, we can deploy something really simple. I think a deployment (web server), a service and an ingress object are enough to test the setup. I have an example over at GitHub.

Just create a new YAML file with the below content.

---
apiVersion: "v1"
kind: "Namespace"
metadata:
  name: "template"
---
apiVersion: "apps/v1"
kind: "Deployment"
metadata:
  name: "template-deployment"
  namespace: "template"
spec:
  selector:
    matchLabels:
      component: "web"
  replicas: 1
  template:
    metadata:
      labels:
        component: "web"
    spec:
      containers:
        - name: "template"
          image: "docker.io/whiletruedoio/template:latest"
          resources:
            limits:
              memory: "512Mi"
              cpu: "250m"
          ports:
            - containerPort: 80
---
apiVersion: "v1"
kind: "Service"
metadata:
  name: "template-service"
  namespace: "template"
spec:
  type: "ClusterIP"
  selector:
    component: "web"
  ports:
    - port: 80
      targetPort: 80
---
apiVersion: "networking.k8s.io/v1"
kind: "Ingress"
metadata:
  name: "template-ingress"
  namespace: "template"
spec:
  rules:
    - http:
        paths:
          - pathType: "Prefix"
            path: "/"
            backend:
              service:
                name: "template-service"
                port:
                  number: 80
...

This can be applied with a single command:

# apply the template.yaml

$ kubectl --kubeconfig k3s.yaml apply -f template.yaml

# Check the deployment

$ kubectl --kubeconfig k3s.yaml -n template get all

You can also point your browser to http://INSTANCE_IP_ADDRESS and see the website in action.
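The image pull and the pod startup can take a moment, so the page might not answer right away. If you want to wait for it from a script instead of reloading the browser, a small retry helper is enough. This is a sketch with a hypothetical function name, and the attempt count and delay in the example are arbitrary.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY COMMAND...: run COMMAND until it succeeds,
# at most ATTEMPTS times, sleeping DELAY seconds between tries.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    until "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}

# Example: wait up to roughly a minute for the ingress to answer
#   retry 30 2 curl -sf -o /dev/null http://INSTANCE_IP_ADDRESS/
```

The curl options make the call fail on HTTP errors and discard the page content, so the helper keeps retrying until the web server responds successfully.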

Automation

Automating the deployment of k3s would be awesome, right? I mean, who wants to remember and take care of firewall settings, fetching files and running scripts in changing versions?

Well, I am on it, but it is not done. You can find an Ansible role on GitHub in the whiletruedoio.general collection. Be aware that it might be moved to a whiletruedoio.container collection soon-ish.

Docs & Links

I also gathered some more links and docs that might be helpful to get started with Kubernetes and k3s.

Conclusion

Well, well, we have some Kubernetes running now. There is so much more that is possible from here. Running a highly available cluster might be a thing, and deploying everything on a bunch of Raspberry Pis or creating deployments for our home lab are options we might tackle soon.

But, before digging into this, I am thrilled to hear what you are expecting from Kubernetes. Are there some special deployments you are interested in? Scenarios that you face today? Let me know! :)